Kent W. Gauen - Space-Time Search

Abstract: Identifying similar patches within a noisy video is a core component of state-of-the-art non-local denoisers. Classical methods search for similar patches across space and time, while recent deep-learning-based methods (such as transformers) search only across space. Searching across time and using optical flow is impractical for deep learning models because the current differentiable non-local search requires representing the video as a database of overlapping patches, which consumes an enormous amount of GPU memory. This paper proposes re-implementing a classical CPU-based algorithm as a CUDA kernel that computes the non-local search on the video directly, side-stepping the memory concern and allowing optical flow to be used inside the non-local search. To make this search efficiently differentiable, we propose a CUDA kernel with a race condition that is \(1000\times\) faster than the associated exact CUDA kernel and \(50\times\) faster than the exact CPU code. We show that our search method allows existing denoising models to extend to new datasets, improves their restoration quality by leveraging the search space across time with optical flow, and enables the fine-tuning of denoising networks through our differentiable non-local search.
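To make the idea of searching "on the video directly" concrete, the following is a minimal pure-Python sketch of an exhaustive space-time patch search. It is not the paper's CUDA kernel: the function names (`patch_distance`, `space_time_search`) and parameters (`ps`, `ws`, `wt`, `k`) are hypothetical, optical flow is assumed to be zero, and the sum of squared differences is an assumed distance. The point it illustrates is that patches are read from the video in place rather than from a pre-built database of overlapping patches.

```python
from heapq import nsmallest


def patch_distance(video, ref, cand, ps):
    """Sum of squared differences between two ps x ps patches.

    Pixels are read straight from `video[t][i][j]`; no patch database is built.
    """
    (t0, i0, j0), (t1, i1, j1) = ref, cand
    total = 0.0
    for di in range(ps):
        for dj in range(ps):
            diff = video[t0][i0 + di][j0 + dj] - video[t1][i1 + di][j1 + dj]
            total += diff * diff
    return total


def space_time_search(video, ref, ps=2, ws=1, wt=1, k=3):
    """Return the k patches most similar to the reference patch.

    video: list of frames, each a 2D list of pixel values (hypothetical layout).
    ref:   (t, i, j) top-left corner of the reference patch.
    ws:    spatial search radius; wt: temporal search radius.
    Assumption: zero optical flow, so the window is centred at the same
    (i, j) in every frame.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    t0, i0, j0 = ref
    candidates = []
    for t in range(max(0, t0 - wt), min(T, t0 + wt + 1)):
        # Clamp the window so every candidate patch lies inside the frame.
        for i in range(max(0, i0 - ws), min(H - ps, i0 + ws) + 1):
            for j in range(max(0, j0 - ws), min(W - ps, j0 + ws) + 1):
                d = patch_distance(video, ref, (t, i, j), ps)
                candidates.append((d, (t, i, j)))
    # Each result is (distance, (t, i, j)), smallest distance first.
    return nsmallest(k, candidates)
```

With a nonzero optical flow, one would presumably offset the window centre in each neighbouring frame by the flow vector before searching; that step is omitted here.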

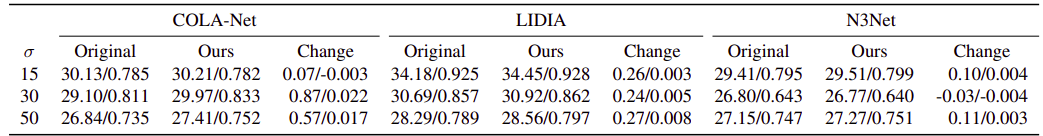

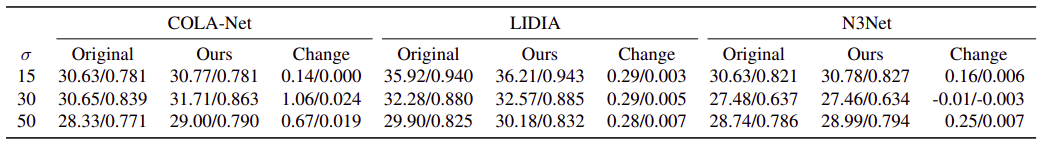

Results: